Not long ago I was asked to join an online discussion group formed around the book Traditional Oil Painting: Advanced Techniques and Concepts from the Renaissance to the Present by artist and author Virgil Elliott. Upon joining, I purchased the book so that my contributions to the forum’s discussions could be framed in the context of the group’s focus.

I did not get far into the book before I began to encounter a number of claims about the nature of visual perception that simply do not accord with a modern understanding of the subject. For example, on page 19 the author makes a series of rather bold claims about the role of perception in visual art. He writes, “The artist must develop the ability to read all things with total objectivity in order to see the truth. This ability is what distinguishes the true artist from everyone else. The perceptions of others are influenced by irrelevancies. Those of the true artists are not. As artists, we must see things as they really are.” Later on the same page, the author doubles down on these claims, writing, “Total accuracy and objectivity of perception are achieved only after considerable study,” and “Consequences notwithstanding, the ability to see with total objectivity is essential if one is to create great art.”

Tucking these comments away for the time being, I continued reading, hoping the ideas would not resurface in any significant way. But lo, only a few dozen pages later, in a section titled “The Photographic Image Versus Visual Reality,” the claims were revisited in the context of the good ol’ “photography-as-a-valid-tool-for-contemporary-painting” argument. This section compared the human eye with a camera in a myriad of ways so as to lead the reader to conclude that photography is a problematic, distorted source of visual information (as compared with the cultivable objectivity to be found in human perception). It was here that I realized that the author’s argument against photography as a viable tool for observational representationalism hinged on the reality of objective perception. (Keep this in mind.)

With these claims regarding the nature of perception and the usefulness of photography in the context of observational representationalism put forward so unapologetically in the book, it should come as no surprise that these ideas could be found peppered throughout the related online discussion group. Recently, I decided to respond to these ideas in a post of my own. My intention was to address these assertions about perception, photography, and observational representationalism while also addressing related claims involving the idea of “slavish copying.” I wrote:

“I have been reading through a thread here about the contemporary representational painter’s use of photography on this forum and I must say that I’m shocked to see so many fallacies and misrepresentations allowed to propagate unchecked. I paint, colloquially “from” life, from photographs, as well as from my imagination. Do you know what the actual case is regarding each scenario? It is that I am painting “from” memory. Each scenario involves the informing and sculpting of behaviors with a vast array of memory resources that are cultivated by visual experience. Yes, the type or mode of reference source can indeed impact behaviors very differently over a wide array of aspects—and those differences can indeed be illuminated thoughtfully—but the differences often put forward today are often fallacy-ridden arguments fueled by irrational dogmatic beliefs.

The argument that the products of photography are somehow a “lesser” form of visual reference source DUE TO the fact that a photograph does not represent “reality” is indeed fallacious as your actual vision system does not provide an “accurate” representation of what we would describe as reality. We’ve known this since the early 1700s. Can an argument be made that even the best possible photograph (surrogate) may provide far less information than the corresponding live percept? Yes—without a doubt. Does either represent actual “reality?” No.

Furthermore, while a photograph and a live percept may produce very different perceptual experiences—“slavish” copying is technically impossible in both scenarios as visual perception is not, in any way, a strictly linear bottom-up translation of physical measurement. The idea could not be any more erroneous.”

For those who have followed my writings in the past, the content of the above post is nothing new. In fact, I visited this topic in 2015 with a paper titled “The ‘Pitfalls’ of reading about Photography ‘Pitfalls’” after reading a similarly titled article, “How to Avoid the Pitfalls of Painting from Photographs,” by Courtney Jordan. I approached the subject of photography again in the summer of 2017 with an article titled “Color, the Pitfalls of Intuition, and the Magic of a Potato.” The 2015 article directly addressed many of the core arguments in this ongoing “photography-as-a-valid-tool-for-contemporary-painting” debate, while the 2017 article is more focused on the nature of perception and the basics of color photography. However, what both articles lack is a more robust examination of the nature of visual perception. That is what I hope to provide here, so that some of the arguments swirling around these topics may become easier to navigate.

First things first, though: I am not going to sugar-coat it. Visual perception is not an intuitive subject by any stretch of the term. In fact, arguably, one of the reasons that the system works so well is that we operate intuitively believing that it is doing something that it is not. Specifically, we intuitively think of visual perception as providing an objective window to the world. Unfortunately, as George Berkeley pointed out several centuries ago, the sources underlying visual stimuli are unknowable in any direct sense. This means that the percepts generated by a biological vision system are not accurate, objective recordings of the environment. The chasm between the physical world and our perceptions of it is significant, and as such, we need to acknowledge that what we “see” is a construct of evolved biology, not an accurate, objective measurement of an external reality. The visual system should not be confused with devices that can garner reasonably accurate measurements of the physical world (e.g., calipers, light meters, spectrophotometers). Rather, the visual system responds to stimuli based on experience-cultivated neural networks in an effort to yield successful behavior. It is not an external reality that composes the image we “see”; rather, it is the biology of the observer.

So let’s explore some of our modern picture of this complex, and still very mysterious, biological system and see if we might better evaluate the aforementioned author’s claims of objective perception. Visual perception can be defined as the ability to interpret the surrounding environment by processing information that is contained in visible light. It is a fascinating process that takes up about 30% of our brain’s cortex. While we have come to understand much about this remarkable ability, a great deal of mystery remains to be solved. Unfortunately, as stated earlier, very little about our vision system is intuitive. In fact, it is not uncommon to find people who intuitively think of the eyes as tiny cameras that record accurate, clear pictures that are eventually sent on to some inner region of the brain where a small “inner self” or homunculus (a Latin term meaning “little man”) waits to review the image.

What’s more, even those who can push past the “homunculus fallacy” still manage to get mired, as we have seen here, in the idea that vision is (at least in some part) veridical (objectively truthful, corresponding to objective measurement or fact). Although evidence refuting this idea continues to mount, many still argue for the veridical nature of vision. One recent argument of this kind came from a colleague who insisted that vision very likely becomes more accurate (more veridical) as the properties of a percept decrease in complexity. Unfortunately, this argument is akin to claiming that the accuracy of one’s “clairvoyance” increases in pick-a-card prediction tasks when the number of cards in play is reduced. By this reasoning, if we limit the pile to one card, one would hit 100% accuracy. Of course, the improvement says nothing about clairvoyance: with n cards in play, blind guessing alone succeeds one time in n.
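The arithmetic behind the card analogy is easy to check. The short Python simulation below is a sketch of a guesser with no insight whatsoever: the guess is drawn at random, independently of the hidden card. The function name, trial count, and seed are illustrative choices, not anything from the original discussion.

```python
import random


def guessing_accuracy(num_cards: int, trials: int = 100_000, seed: int = 1) -> float:
    """Fraction of correct picks for a guesser with NO special insight.

    Each trial draws a hidden card and an independent random guess from the
    same pile of num_cards cards; the guess never "sees" the hidden card.
    """
    rng = random.Random(seed)
    hits = sum(rng.randrange(num_cards) == rng.randrange(num_cards) for _ in range(trials))
    return hits / trials


for n in (52, 10, 4, 1):
    print(f"{n:>2} cards: accuracy {guessing_accuracy(n):.3f} (pure chance = {1 / n:.3f})")
```

Accuracy climbs toward 100% as the pile shrinks even though nothing about the guesser has changed, which is why a rise in “accuracy” on simpler tasks cannot, by itself, show that a process is veridical.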

Today, a growing body of interdisciplinary research adds weight to the idea that our visual percepts are generated according to the empirical significance of light stimuli (information derived from past experience) rather than the characteristics of the stimuli as such. In other words, the visual system did not evolve for veridicality but for evolutionary fitness. To this point, neuroscientist Dale Purves states, “…vision works by having patterns of light on the retina trigger reflex patterns of neural activity that have been shaped entirely by the past consequences of visually guided behavior over evolutionary and individual lifetime. Using the only information available on the retina (i.e., frequencies of occurrence of visual stimuli, light intensities), this strategy gives rise to percepts which incorporate experience from trial and error behaviors in the past. Percepts generated on this basis thus correspond only coincidentally with the measured properties of the stimulus for the underlying objects.”

Support for this idea comes not only from fields like perceptual neuroscience and modern vision science but from several other realms of inquiry. Donald D. Hoffman, a professor of cognitive science at the University of California, Irvine, has spent the past three decades studying perception, artificial intelligence, evolutionary game theory, and the brain. His findings lend significant support to the idea that visual perception is non-veridical. In a 2016 interview with Quanta Magazine, Dr. Hoffman states, “The classic argument is that those of our ancestors who saw more accurately had a competitive advantage over those who saw less accurately and thus were more likely to pass on their genes that coded for those more accurate perceptions, so after thousands of generations we can be quite confident that we’re the offspring of those who saw accurately, and so we see accurately. That sounds very plausible. But I think it is utterly false. It misunderstands the fundamental fact about evolution, which is that it’s about fitness functions — mathematical functions that describe how well a given strategy achieves the goals of survival and reproduction. The mathematical physicist Chetan Prakash proved a theorem that I devised that says: According to evolution by natural selection, an organism that sees reality as it is will never be more fit than an organism of equal complexity that sees none of reality but is just tuned to fitness. Never.” Regarding the hundreds of thousands of computer simulations run by Dr. Hoffman and his research team, he adds, “Some of the [virtual] organisms see all of the reality, others see just part of the reality, and some see none of the reality, only fitness. Who wins? Well, I hate to break it to you, but perception of reality goes extinct. In almost every simulation, organisms that see none of reality but are just tuned to fitness drive to extinction all the organisms that perceive reality as it is. So the bottom line is, evolution does not favor veridical, or accurate perceptions. Those perceptions of reality go extinct.”
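To see how a perceiver tuned to fitness can outscore one tuned to the truth, here is a deliberately tiny toy in Python. It is not Hoffman’s or Prakash’s simulation; the bell-shaped payoff curve, the two-category percepts, and every name below are hypothetical choices. The key assumptions are that payoff is non-monotonic in the true quantity (too little or too much of a resource is bad) and that both organisms have the same perceptual “budget” of two categories, echoing the “equal complexity” condition in the quote.

```python
import math
import random


def payoff(quantity: float) -> float:
    """Hypothetical fitness function: a resource is best in moderation.

    Payoff peaks at quantity 50 and falls off on either side (a Gaussian),
    so "more" is not always "better".
    """
    return math.exp(-((quantity - 50.0) ** 2) / (2 * 15.0 ** 2))


# Equal complexity: each perceiver sees only two categories, 0 or 1.
def truth_percept(quantity: float) -> int:
    """Categories track the true quantity: 'little' versus 'a lot'."""
    return 0 if quantity < 50.0 else 1


def fitness_percept(quantity: float) -> int:
    """Categories track payoff only: 'poor' versus 'good'; quantity is invisible."""
    return 0 if payoff(quantity) < 0.5 else 1


def average_payoff(percept, trials: int = 20_000, seed: int = 7) -> float:
    """Offer two random resource patches per trial; the organism takes the one
    whose percept category is higher (ties go to the first patch)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        a, b = rng.uniform(0.0, 100.0), rng.uniform(0.0, 100.0)
        chosen = b if percept(b) > percept(a) else a
        total += payoff(chosen)
    return total / trials


print("truth-tuned:  ", round(average_payoff(truth_percept), 3))
print("fitness-tuned:", round(average_payoff(fitness_percept), 3))
```

With a bell-shaped payoff, the truth-tuned categories carry no information about payoff at all, so that organism does no better than picking at random. If payoff rose steadily with quantity, the truth-tuned categories would become informative and the gap would narrow; the shape of the fitness function is what drives the result.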

But the counterintuitiveness of the vision system is not limited to the big picture (pardon the pun). Even at the earliest steps of the vision process, we find one counterintuitive factor after another. For example, did you know that when light energy interacts with our specialized light-sensitive receptors (photoreceptors), they respond with less activity instead of more? In other words, our photoreceptors are far more active in the absence of light! Or how about the fact that all of the biological machinery that processes the output of those receptors sits in front of them, getting in the way of the light? And while many of you might be aware that the incoming light patterns are inverted and reversed on the retina, did you know that those patterns must first pass through a dense web of blood vessels that you have perceptually adapted to? It’s true. So let’s now look at some of the observable machinery and processes that facilitate our perceptions so that we may better appreciate, dissect, or navigate this topic.

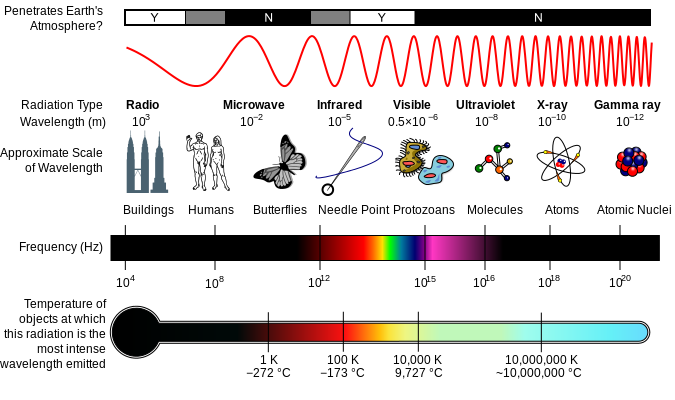

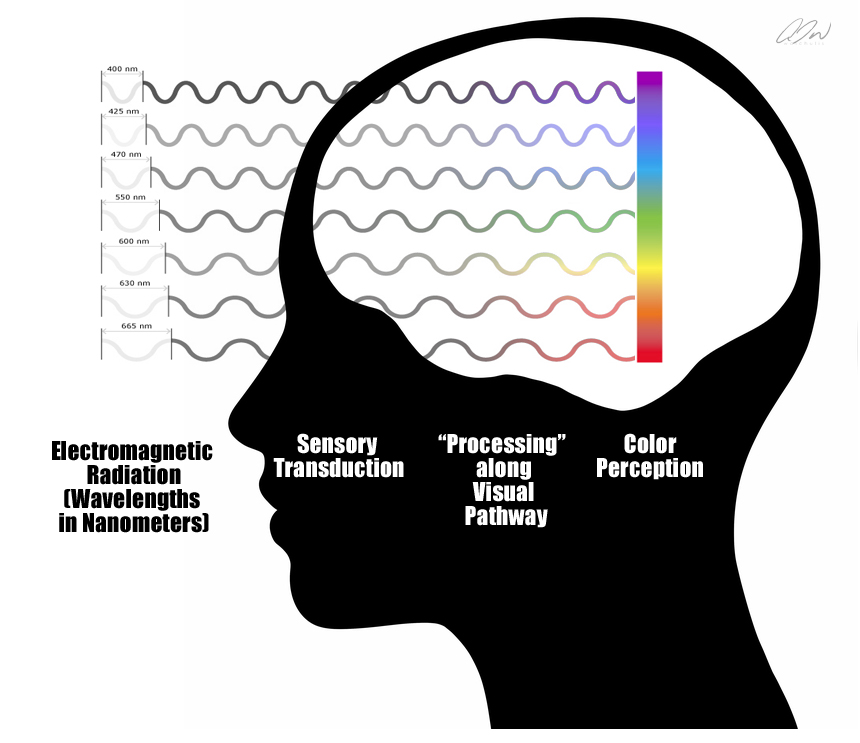

Let’s begin with light. As I put forth earlier, visual perception can be defined as the ability to interpret the surrounding environment by processing information that is contained in visible light. Visible light, or simply light, is the portion of the electromagnetic spectrum that is visible to the human eye. A typical human eye will respond to wavelengths ranging from about 390 to 700 nanometers.

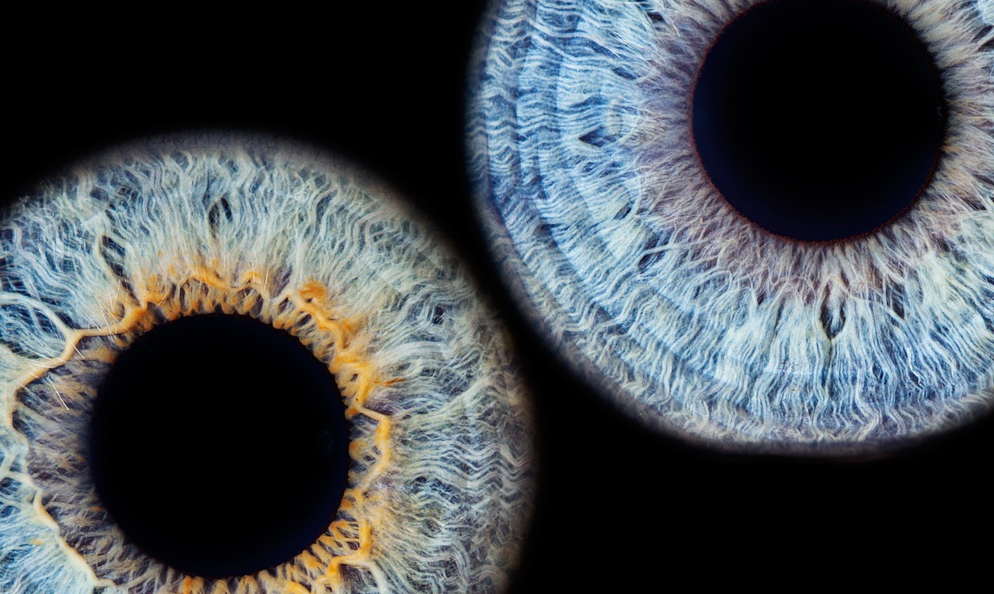

Visible light enters the eye through a small aperture called the pupil. The cornea, a dome-shaped transparent structure in front of the pupil, does most of the bending of the incoming light; together with a biconvex lens just behind the pupil, it brings the light rays into a focused pattern on a region at the back of the inner eyeball called the retina. This tissue is the neural component of the eye and contains specialized light-sensitive cells called photoreceptors. It is these photoreceptors that convert environmental energy (light) into electrical signals that our brains can use, a process called phototransduction.

Now upon reading that last paragraph, some might be quick to state that, thus far, the eye sounds a lot like a camera. After all, the basic idea behind photography is to record a projected light pattern with an electronic sensor or light-sensitive plate that can be used to generate a percept surrogate. Generally speaking, in the case of a digital camera sensor (something often compared with the retina), each pixel in the sensor’s array absorbs photons and generates electrons. These electrons are stored as electrical charge in a region of the pixel called a potential well, and the amount of charge is proportional to the light intensity at that location on the sensor. The charge is then converted to an analog voltage, which is amplified and digitized (turned into numbers). The composite pattern of data from this process (stored as binary) represents the pattern of light that the sensor was exposed to and may be used to create an image of the light pattern recorded during the exposure. Additionally, a Bayer mask or Bayer filter (a color filter array) is placed over the sensor so that each pixel records light intensity through one of three filters (red, green, or blue); full color values for every pixel are later interpolated from neighboring pixels, a step called demosaicing.
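The readout chain just described (photons to electrons, electrons to voltage, voltage to a number, one filter per pixel) can be sketched in a few lines of Python. This is a minimal illustration under assumed values: the quantum efficiency, full-well capacity, and bit depth are made-up example numbers, not the specifications of any real sensor, and all function names are hypothetical.

```python
# Minimal sketch of a digital sensor's readout. The quantum efficiency,
# full-well capacity, and bit depth are illustrative assumptions only.

BAYER_TILE = (("R", "G"), ("G", "B"))  # 2x2 RGGB pattern repeated across the array


def filter_at(row: int, col: int) -> str:
    """Which color filter of the Bayer mosaic sits over the pixel at (row, col)."""
    return BAYER_TILE[row % 2][col % 2]


def read_pixel(photons: float, quantum_efficiency: float = 0.5,
               full_well: float = 20_000.0, bit_depth: int = 12) -> int:
    """Photons -> electrons in the potential well -> voltage -> digital number."""
    electrons = min(photons * quantum_efficiency, full_well)  # the well saturates
    voltage = electrons / full_well                            # charge as a 0..1 signal
    return round(voltage * (2 ** bit_depth - 1))               # analog-to-digital step


def capture_raw(scene):
    """scene[row][col] maps 'R', 'G', 'B' to photon counts passing each filter.

    Each pixel keeps only the count for the filter above it, so the raw data
    holds ONE number per pixel; full RGB values must later be interpolated
    (demosaiced) from neighboring pixels.
    """
    return [[read_pixel(scene[r][c][filter_at(r, c)]) for c in range(len(scene[r]))]
            for r in range(len(scene))]


# A uniform 2x2 patch of light: equal photon counts behind every filter.
patch = [[{"R": 10_000, "G": 10_000, "B": 10_000}] * 2 for _ in range(2)]
print(capture_raw(patch))  # [[1024, 1024], [1024, 1024]]
```

Every value in the output is a count of light through a filter; nothing in the pipeline is “color” until a display or print turns those numbers back into light.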

So is this how the eye or vision works? Do we simply use objective recordings of wavelength and intensity responses at each photoreceptor to produce a clear percept?

Hardly. There are indeed some similarities between the eye and a camera in terms of optics and photosensitive materials, but that is where any significant similarity ends. Let’s take a look at some of the differences:

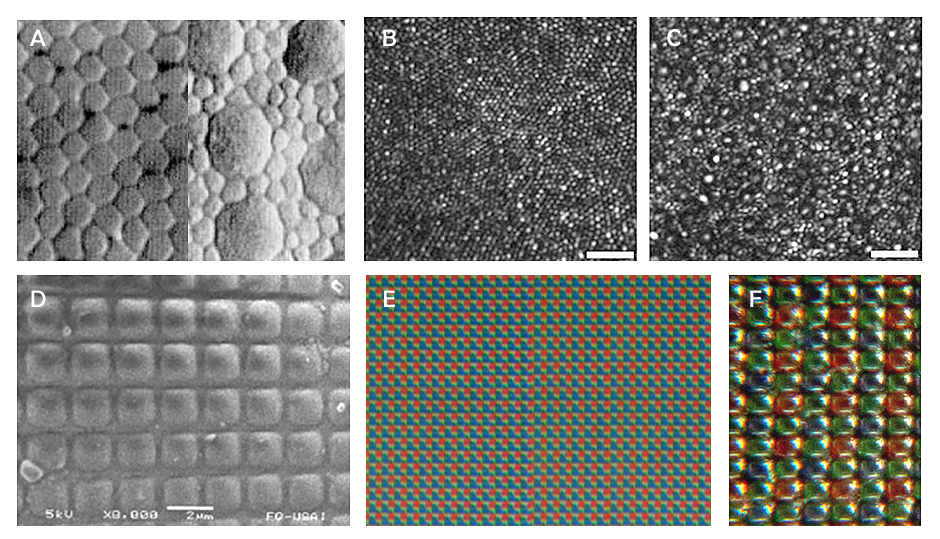

First, it is important to understand that our photoreceptor landscape is nothing like the uniform array of pixels found in a digital image sensor. The retina, about 32 mm across from ora serrata to ora serrata, has a very uneven distribution of photoreceptors. Its two main types, known as rods and cones, differ significantly in number, morphology, function, and manner of synaptic connection. Rods are far more numerous than cones (about 120 million rods to 8 million cones), are far more sensitive to light, provide very low spatial resolution, and hold only one photopigment. Conversely, cones are far fewer in number, less sensitive to light, and provide very high spatial resolution; they come in three types, each carrying a photopigment that is differentially sensitive to specific wavelengths of light (thus facilitating what we understand as color vision).

Cones are present at low density throughout the retina, with a sharp peak within a 1.5 mm central region known as the fovea. Rods, however, are densely distributed throughout the retina but decline sharply in the fovea and are completely absent at its very center, a 0.35 mm region called the foveola. To appreciate how small the resulting window of high acuity is within the visual field, you need only look at your thumbnail held at arm’s length.
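The thumbnail comparison can be checked with a little trigonometry. The Python sketch below assumes a thumbnail about 1.5 cm wide held about 60 cm from the eye, and uses 0.29 mm of retina per degree of visual angle, a common rule of thumb for an average adult eye; all three numbers and the helper names are assumptions for illustration, while the 0.35 mm and 1.5 mm figures come from the paragraph above.

```python
import math

MM_PER_DEGREE = 0.29  # assumed rule of thumb: retinal millimetres per degree of visual angle


def visual_angle_deg(size: float, distance: float) -> float:
    """Angle (degrees) subtended by an object of a given size at a given distance (same units)."""
    return math.degrees(2 * math.atan(size / (2 * distance)))


def retina_mm_to_deg(mm: float) -> float:
    """Approximate visual angle covered by a stretch of retina."""
    return mm / MM_PER_DEGREE


thumbnail = visual_angle_deg(size=1.5, distance=60.0)  # assumed: 1.5 cm nail, 60 cm arm
foveola = retina_mm_to_deg(0.35)
fovea = retina_mm_to_deg(1.5)

print(f"thumbnail at arm's length ≈ {thumbnail:.1f}°")
print(f"foveola (0.35 mm) ≈ {foveola:.1f}°, fovea (1.5 mm) ≈ {fovea:.1f}°")
```

Under these assumptions the thumbnail spans roughly 1.4 degrees, on the same scale as the foveola’s roughly 1.2 degrees, while the whole fovea covers only about 5 degrees of the visual field.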

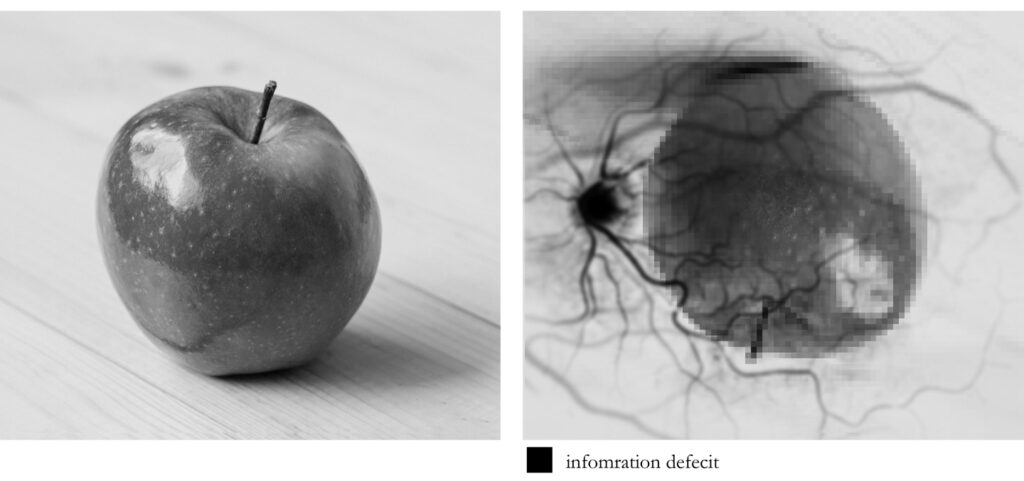

Another intuitive misconception worth mentioning here, in relation to acuity, is the idea that things get more and more blurry as we move outward from the fovea to the periphery. The truth is, this lower acuity does not yield image blur, but rather a spatial imprecision. Neuroscientist Margaret Livingstone offers a great demonstration on this point in her book Vision and Art: The Biology of Seeing. Here is a recreation of that demonstration:

Second, we need to look at what is happening with the output of our photoreceptors. It is very important to understand that our rods and cones do not register objective measurements of light intensity and wavelength the way an image sensor does. Rather, responses from these specialized receptors trigger a cascade of highly dynamic, complex processing through multiple cell layers and a myriad of receptive fields. The resulting signals from this retinal activity are then ushered off to our next stop on the visual pathway: the thalamus. But before we head over to this well-known “relay station” in the brain, I would like to present another issue at the level of the retina worth considering when comparing a camera with the eye: the quality of that initial light pattern projection.

Do you remember the last time you took a picture with your camera when you had some debris or smudge on the lens? Did it ruin the shot you were trying to take? How about the last time you had to deal with a piece of tape keeping light from entering a part of the lens? Or the last time you pulled up a pile of plant roots to suspend in front of your camera before taking that nice portrait shot?

Do those last two questions seem a bit ridiculous? Well, those seemingly ridiculous factors represent some real issues that our visual system has to deal with early in the perception process. As I mentioned when first describing the counterintuitiveness of the visual system, the human eye has evolved with all of the biological “machinery” used to process the output of the photoreceptors in FRONT of the photoreceptors themselves. That’s right: all of the incoming light has to pass through all of the cell layers that will process the output of the photoreceptors. While these cell layers themselves are not much of a problem (as they are relatively transparent), a problem does arise when the signals from all of that machinery need to exit the eye.

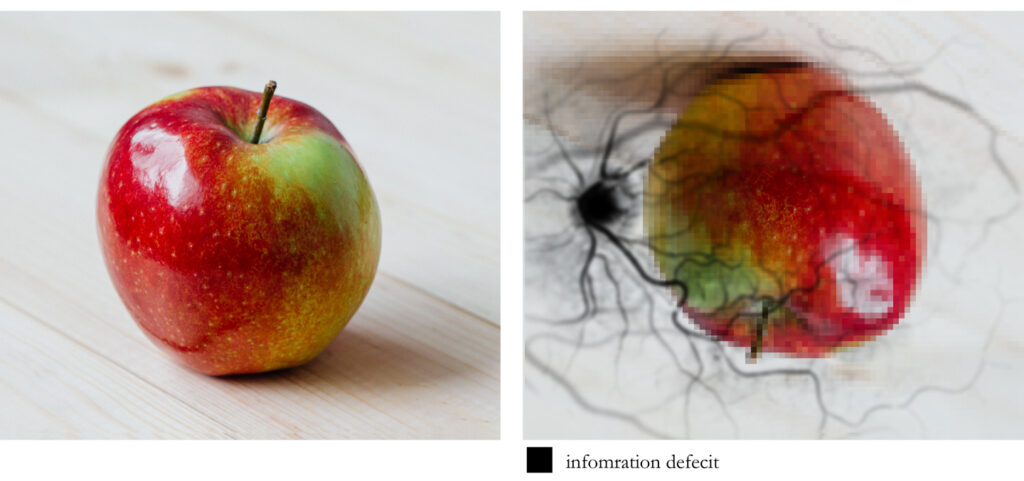

After the signals that arise from the photoreceptors make their way through the other cell layers, they eventually reach the ganglion cells, whose axons (long, slender projections of nerve cells, or neurons, that usually conduct electrical impulses away from the neuron’s cell body, or soma) must leave the eye as a nerve bundle that we call the optic nerve. The region where this nerve exits the eye is called the optic disk, and because it contains no photoreceptors, it creates a gap in our receptor array. This exit, or “blind spot,” is about 1.86 × 1.75 mm. Oddly enough, this gap in our visual field is slightly LARGER than the 1.5 mm fovea, our region of highest visual acuity.
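To get a feel for what those millimetres mean in practice, the Python sketch below converts the optic disk’s dimensions into degrees of visual angle and then into a width at a viewing distance. It assumes the same rule of thumb of 0.29 mm of retina per degree; that conversion, the one-meter viewing distance, and the helper names are illustrative assumptions, while the 1.86 × 1.75 mm and 1.5 mm figures come from the text.

```python
import math

MM_PER_DEGREE = 0.29  # assumed approximation: retinal millimetres per degree of visual angle


def retina_mm_to_deg(mm: float) -> float:
    """Approximate visual angle spanned by a stretch of retina."""
    return mm / MM_PER_DEGREE


def width_at_distance(angle_deg: float, distance_cm: float) -> float:
    """Width (cm) of the region that a given visual angle covers at a viewing distance."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


# Dimensions taken from the text above.
blind_spot_mm = (1.86, 1.75)
fovea_mm = 1.5

blind_spot_deg = tuple(retina_mm_to_deg(d) for d in blind_spot_mm)
gap_at_1m = width_at_distance(blind_spot_deg[0], distance_cm=100.0)

print(f"blind spot ≈ {blind_spot_deg[0]:.1f}° × {blind_spot_deg[1]:.1f}°")
print(f"fovea ≈ {retina_mm_to_deg(fovea_mm):.1f}°")
print(f"at 1 m, the blind spot covers a patch about {gap_at_1m:.0f} cm wide")
```

So, with one eye closed, a patch of canvas roughly 11 cm wide at a one-meter viewing distance can fall entirely within the blind spot and still go unnoticed.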

If you like, you can even “experience” your blind spot with a simple exercise:

Another consideration worth mentioning concerns something that you might have already noticed in the fundus photograph above: a rich web of blood vessels that populates the eye. As you might suspect, these little buggers are also in the way of the incoming light that is heading toward the retina. Because of what we call sensory or neural adaptation (when a sensory stimulus is unchanging, we tend to stop “processing” it, the way you stop feeling the clothes on your body after a while), we don’t normally perceive these vessels, but by influencing the incoming light we can force this network to reveal itself. To do this you’ll need an index card, a pencil or something to poke a small hole in the index card, and a bright surface.

So, consider that initial light pattern, which we often think of as a clear window on the world. This “image” on the retina is inverted and left-right reversed; it passes through ill-placed machinery that results in a relatively large blind spot; it is partly occluded by a significant web of blood vessels; and all of it falls onto an unevenly distributed array of varied photoreceptors. So what might that look like?

Now many might be quick to think that this just can’t be true. The image I “see” is so rich and detailed; how could all of this “stuff” be in the way? Again, it is because visual perception does not involve any sort of clear window on the world. Take a moment to consider macular degeneration. This unfortunate, incurable condition is the leading cause of vision loss, affecting more than 10 million Americans, more than cataracts and glaucoma combined. It is the deterioration of the central portion of the retina, known as the macula (which contains the fovea and foveola). Because of the way our brain uses sensory data, the deterioration may simply go unnoticed, especially in cases with spotty macular cell damage or dysfunction, so many people see their ophthalmologist only when the disease is fairly advanced. Our brain takes what sensory data it encounters and reflexively responds in a manner that we have evolved to find useful. I can’t stress enough how much visual perception is NOT like a camera taking snapshots via light intensity/wavelength measurements… nothing like it.

Oh, and I almost forgot! There really isn’t any “color” in this initial projection, as color is not a property of the environment. Contrary to what some may believe, we do not sense color. While that may also sound counterintuitive, it is indeed true. Color is the visual experience that arises from our biology interacting with the spectral composition of the light (electromagnetic radiation) that is emitted, transmitted, or reflected by the environment. Our various photoreceptors DO respond differently to different wavelengths of light, which gives us the ability to discriminate between wavelengths and, ultimately, an experience of color vision, but color itself is just not a component of the early projection. So, perhaps a more “realistic” representation of what is falling on the retina might be this:

While we are on this color issue, exploring the counterintuitiveness of our vision system, and examining the comparison between a camera and the eye, I’d like to take a moment to share an interesting argument that I encountered not too long ago from, believe it or not, a professional artist who was absolutely convinced that color is indeed part of the environment. (I explore this idea in the above-mentioned 2017 article.) No matter what evidence I put forward to demonstrate that this was NOT the case, he would not budge from his position. When I asked him to present the evidence that justified his position, he stated that color MUST be a physical property of the environment “because a camera can record it.”

Now I am sure that a good number of you reading this have already spotted the glaring, fatal flaw in this argument, but for many individuals unfamiliar with the fundamentals of color vision and color photography, the argument definitely seems to have some teeth. However, like most intuitive arguments for a flat earth, a young earth, or intelligent design, such arguments quickly deteriorate with an increase in scientific literacy and critical thinking. Just to be clear, though: with color photography, electronic sensors or light-sensitive chemicals respond to specific aspects of electromagnetic radiation at the time of exposure. The recorded information is then used to create a percept surrogate, either by mixing various proportions of specific light wavelengths (“additive color,” used for video displays, digital projectors, and some historical photographic processes) or by using dyes or pigments to remove various proportions of particular wavelengths from white light (“subtractive color,” used for prints on paper and transparencies on film). The color you perceive in a surrogate, like a traditional photograph or digital image, arises from the wavelengths of light coming from the surrogate, not from the camera having “captured” color like some fairy in the garden.
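The two ways of building a surrogate can be sketched with a toy Python model in which a light is described only by its power in three coarse wavelength bands (short, medium, long). The three-band simplification, the idealized dye transmittances, and all names below are assumptions for illustration; real spectra and real dyes are continuous and imperfect.

```python
# Toy model: a light is described only by its power in three coarse wavelength
# bands (short, medium, long). Real spectra are continuous; three bands are a
# simplifying assumption for illustration.
Spectrum = tuple[float, float, float]


def additive_mix(*lights: Spectrum) -> Spectrum:
    """Additive color: superimposed lights add their power band by band."""
    return tuple(sum(bands) for bands in zip(*lights))


def subtractive_filter(light: Spectrum, *filters: Spectrum) -> Spectrum:
    """Subtractive color: each dye or pigment layer transmits a fraction of each band."""
    out = list(light)
    for transmittance in filters:
        out = [power * t for power, t in zip(out, transmittance)]
    return tuple(out)


WHITE = (1.0, 1.0, 1.0)
RED_LIGHT, GREEN_LIGHT = (0.0, 0.0, 1.0), (0.0, 1.0, 0.0)
CYAN_DYE = (1.0, 1.0, 0.0)    # idealized: absorbs the long band
YELLOW_DYE = (0.0, 1.0, 1.0)  # idealized: absorbs the short band

print(additive_mix(RED_LIGHT, GREEN_LIGHT))             # (0.0, 1.0, 1.0)
print(subtractive_filter(WHITE, CYAN_DYE, YELLOW_DYE))  # (0.0, 1.0, 0.0)
```

In both cases the output is just a distribution of light across wavelength bands. That a typical observer calls the first result “yellow” and the second “green” is supplied by the observer’s visual system, not stored anywhere in the numbers.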

What might make wrapping our heads around this even easier is to clearly define two basic terms concerning the manner in which we interact with the environment: sensation and perception. These terms are often used synonymously, but they describe two different aspects of what we normally understand as “sensory experiences.” Sensation describes a low-level process during which particular receptor cells respond to particular stimuli. At the level of sensation, our sensory organs are engaging in what is known as transduction (or in our case, as mentioned earlier, phototransduction): the conversion of energy from the environment into a form of energy that our nervous system can use.

Perception, on the other hand, can be simply defined as the assignment of “meaning” to a sensation.

So what is actually happening when we visually encounter something that we might understand or describe as “blue”?

Putting aside scenarios in which the object may be an actual source of light, or a structural configuration that is bending light, it is likely that the surface of the object is absorbing all of the available wavelengths of the visible spectrum except for some that are relatively short. Now the standard human observer has a specific type of photoreceptor cell in the retina that is “tuned” for such short wavelengths. That means that when this particular cell type encounters this type of wavelength, it responds by initiating a complex cascade of electrochemical events that will eventually lead to more and more complex processes along a particular “route” we are currently exploring–the “visual pathway”. This low-level cascade initiation is what we could define as a sensation.
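To make the sensation step concrete, here is a toy Python model of it and nothing more. It assumes Gaussian cone sensitivity curves peaking at roughly 420 nm (short), 530 nm (medium), and 560 nm (long); real cone curves are asymmetric, and the Gaussian shape, the 40 nm width, the flat white illuminant, and the surface’s reflectance values are all simplifying assumptions. The function names are hypothetical.

```python
import math

# Assumed approximate peak sensitivities (nm) for the three cone types; the
# Gaussian shape and 40 nm width are simplifications for illustration.
CONE_PEAKS = {"S": 420.0, "M": 530.0, "L": 560.0}
WIDTH_NM = 40.0
WAVELENGTHS = range(390, 701, 10)  # the visible range cited earlier, in 10 nm steps


def sensitivity(cone: str, wavelength: float) -> float:
    """Relative sensitivity of one cone type at one wavelength (toy Gaussian)."""
    return math.exp(-((wavelength - CONE_PEAKS[cone]) ** 2) / (2 * WIDTH_NM ** 2))


def reflected_light(wavelength: float) -> float:
    """Hypothetical surface under flat white light: reflects mostly short wavelengths."""
    return 0.9 if wavelength < 480 else 0.05


def cone_responses() -> dict:
    """Sum each cone type's sensitivity-weighted catch of the reflected light."""
    return {cone: sum(reflected_light(w) * sensitivity(cone, w) for w in WAVELENGTHS)
            for cone in CONE_PEAKS}


print({cone: round(value, 2) for cone, value in cone_responses().items()})
```

The output is three numbers, one per cone type, with the short-wavelength cones responding most strongly. Nothing in it says “blue”; on the account given here, that label is assigned later in the visual pathway.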

The cascade of events initiated in the retina will eventually lead to specific activity in other, “higher,” or more complex processing regions of the brain such as, but not limited to, the lateral geniculate nucleus of the thalamus, the striate and extrastriate cortex of the occipital lobe, and the temporal lobe (regions we will visit soon as our walkthrough of the visual pathway continues). It is through the aggregate activity of these brain regions that we find a perceptual response: in our case here, “blue.”

So as you might already be starting to suspect, in this example, the object in the environment is NOT physically blue, the wavelengths reflected off the surface of the object are not physically blue, nor do the photosensitive cells referenced here “sense” blue. Blue is not a sensation–rather, it is a perception. We assign “blue” to a particular sensation that is a biological response to a certain wavelength.

Hopefully that makes some sense, clears up a few more counterintuitive factors, and allows us to continue our glimpse at a few key points on the visual pathway.

From the retina we will jump to what is often referred to as the main relay station of the brain, the thalamus, or more specifically, a region of the thalamus called the lateral geniculate nucleus (LGN). Signals from both motor and sensory systems (with the exception of the olfactory system) are relayed through the thalamus, where they are processed before being sent on to a myriad of cortical regions. Yet the LGN seems to be much more than a mere relay station or gateway to the visual cortex. It is a multilayered array of sophisticated microcircuits, which suggests a region of very complex processing. What makes this area even more fascinating is that only about 10% of its inputs come from the retinas. The other 90% come from a number of cortical and subcortical regions, including significant input from the primary visual cortex itself. Based on what we can observe anatomically, this region may be a major site of top-down influence. In other words, it appears that the visual cortex may play a very large role here in controlling what is actually sent on to… the visual cortex. (Hopefully, at this point, many of you are starting to better appreciate just how unlike a camera this system really is.)

From here we will exit the LGN via a large fan of axons radiating outward, appropriately dubbed the optic radiations, and land on the banks of (and somewhat within) the calcarine sulcus (or fissure) of the occipital lobe. This noticeably striated region of tissue is home to the primary visual cortex, the most studied part of the visual brain.

The part of the occipital lobe that receives the projections from the LGN is known as the primary visual cortex (also called visual area 1 (V1) or the striate cortex). Surrounding and extending beyond this region, above and below the calcarine sulcus, are the so-called extrastriate regions. These consist of visual areas 2 (V2), 3 (V3), 4 (V4), 5 (V5 or MT), and 6 (V6 or DM). Now before you shudder, expecting me to go into a long series of complex processes here, don’t worry. I am not. What I am going to do is jump forward to the cascades of neural activity that unfold from here, furthering our understanding of this elaborate “pathway.”

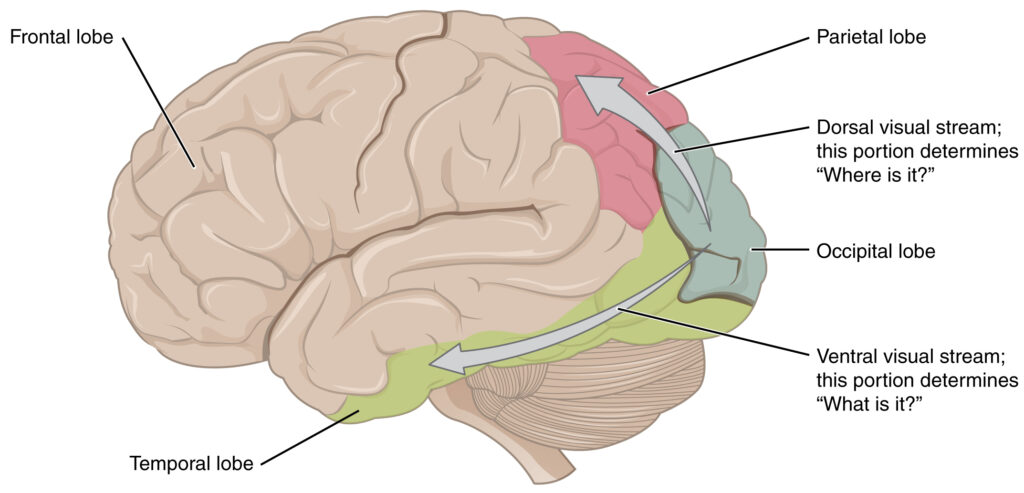

So where do things go from here? Each V1 (remember that there is one in each hemisphere) transmits information to two distinct pathways, or processing “streams”: the ventral or “what” stream and the dorsal or “where” stream. The information relevant to these two pathways is processed separately but remains physically intermingled through much of the visual pathway; it is only when this information reaches “higher” levels that we see physical separation.

Anatomically, the ventral stream begins with V1, goes through V2 and V4, and then on to regions in the inferior temporal cortex (IT). This stream is often referred to as the “what” pathway, as it is associated with form recognition and object representation. Accordingly, we find neurons in this stream that respond selectively to signals that might represent particular wavelengths of light, shapes, textures, and, at the “highest” levels of the pathway, faces and entire objects. A number of regions within the inferior temporal cortex work together in processing and recognizing neural activity relevant to “what” something is. In fact, discrete categories of objects are associated with different regions. For example, the fusiform face area (FFA), located in the fusiform gyrus, exhibits selectivity for incoming activity patterns linked to faces, while activity in the parahippocampal place area (PPA) helps us differentiate between scenes and objects. The extrastriate body area (EBA) aids in distinguishing body parts from other objects, while the lateral occipital complex (LOC) assists in tasks that discriminate intact shapes from “scrambled” stimuli. These areas all work together, along with the hippocampus (a dynamic memory region believed to be significantly involved in object “compare and contrast” tasks), to build an understanding of the physical world. This pathway also has strong connections to the medial temporal lobe (long-term memory), the limbic system (emotion), and the aforementioned dorsal stream. This stream has lower contrast sensitivity than the dorsal stream and is somewhat slower to respond. However, it has slightly higher acuity than its counterpart and carries all of the information that will eventually yield an experience of color.

The dorsal stream also begins with V1 and goes through V2, but then travels into the dorsomedial area (V6/DM), the middle temporal area (V5/MT), and on to the posterior parietal cortex. The dorsal stream, often referred to as the “where” or “how” pathway, is associated with motion and location. Accordingly, within this stream one finds neurons that show selectivity not for signals representing shape or wavelength, but for signals that represent location, direction, and speed. This stream is essentially “colorblind”, but it has greater sensitivity to contrast, is quicker to respond (albeit more transiently), and has slightly lower acuity.

So, as with our blind spot, blood vessels, and the spatial imprecision of our periphery, can we see a demonstration of how these two streams process things differently?

You betcha.

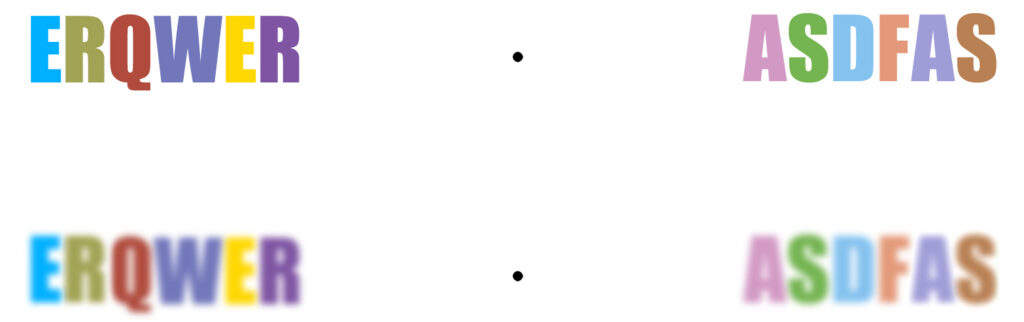

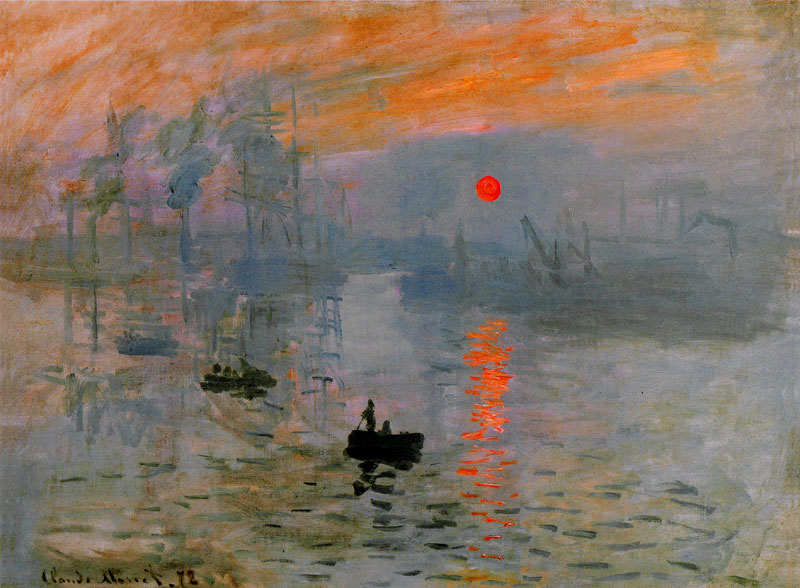

One of my favorite ways to demonstrate this is to introduce a stimulus that pushes the two streams out of balance. If we could make an object or image visible to only ONE of the streams, we might experience something quite interesting. And indeed we can, with color.

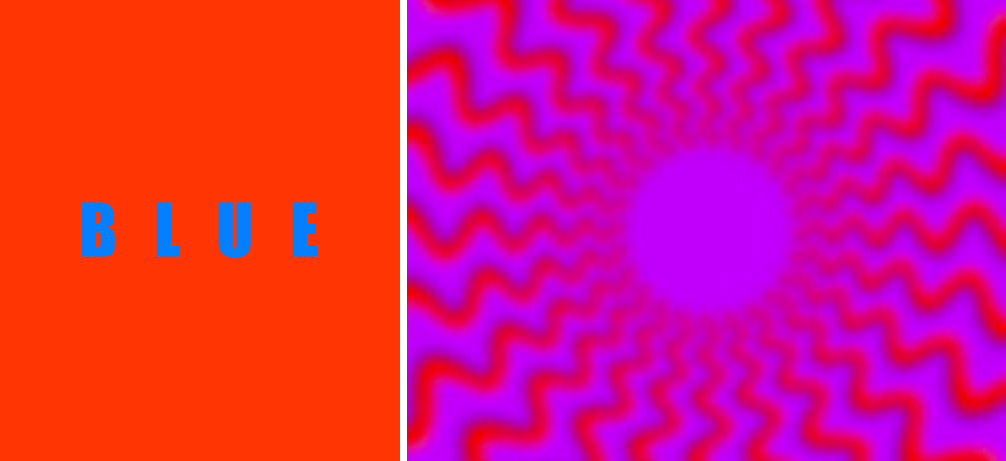

Recall that the “where” stream is essentially colorblind. We can therefore present a stimulus containing two or more colors that are perceived as reasonably equiluminant (that is, they appear to have the same level of lightness or brightness). Here is one such example:

Do you notice an odd visual shimmer, jitter, or vibration when trying to read the letters here? This is because while your “what” system can easily process the color contrast, the colorblind “where” system cannot. In other words, your “where” system is having a heck of a time trying to determine where the boundaries of those letters actually are.
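If you want to build a stimulus like this yourself, one rough starting point is to compare the relative luminance of candidate colors. The sketch below is a minimal illustration under stated assumptions: it assumes 8-bit sRGB input and uses the standard sRGB linearization with Rec. 709 luminance weights (0.2126, 0.7152, 0.0722). The helper names, the example colors, and the `tolerance` threshold are hypothetical choices for illustration, not a perceptual standard. This is a colorimetric approximation only; display calibration and individual differences mean two colors that match numerically may still need fine-tuning by eye. A luminance-based grayscale conversion keeps only this one quantity, which is why an equiluminant figure tends to nearly vanish in grayscale.

```python
def srgb_to_linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel (0-255) to linear light (0.0-1.0)."""
    c = channel / 255.0
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Approximate relative luminance Y of an sRGB color (Rec. 709 weights)."""
    r, g, b = (srgb_to_linear(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def roughly_equiluminant(
    a: tuple[int, int, int], b: tuple[int, int, int], tolerance: float = 0.02
) -> bool:
    """True if the two colors' relative luminances differ by less than tolerance.

    The default tolerance is an arbitrary, illustrative threshold.
    """
    return abs(relative_luminance(a) - relative_luminance(b)) < tolerance


if __name__ == "__main__":
    # Hypothetical example pair: a saturated red and a darker green.
    red, green = (255, 0, 0), (0, 148, 0)
    print(relative_luminance(red), relative_luminance(green))
    print(roughly_equiluminant(red, green))
```

Red and green are used here only because they are a common pairing for this kind of demonstration; any two hues can be nudged toward a luminance match the same way.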

One of the most famous examples of an artist exploiting this effect is Claude Monet’s Impression, Sunrise. Many viewers have reported that the sun in the painting appears to “vibrate.”

Neurobiologist Margaret Livingstone explains the peculiarity of Monet’s equiluminant sun in her book Vision and Art: The Biology of Seeing. She writes, “The sun in this painting seems both hot and cold, light and dark. It appears so brilliant that it seems to pulsate. But the sun is actually no lighter than the background clouds, as we can see in the grayscale version. It is precisely equiluminant with–that is, it has the same lightness as–the gray of the background clouds. This lack of luminance contrast may explain the sun’s eerie quality: to the more primitive subdivision of the visual system (which is concerned with movement and position) the painting appears as it does in the grayscale version; the sun almost invisible. But the primate-specific part of the visual system sees it clearly. The inconsistency in perception of the sun in the different part of the visual system gives it this weird quality. The fact that the sun is invisible to the part of the visual system that carries information about position and movement means that its position and motionlessness are poorly defined, so it may seem to vibrate or pulsate. Monet’s sun really is both light and dark, hot and cold.”

And while you can find countless visual demonstrations (often labeled as illusions) in textbooks, classrooms, and websites showing that what we see does not accord with an objective reality, I couldn’t pass up the opportunity to share one of my favorite canonical examples: the simultaneous brightness/lightness contrast demonstration.
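The classic version of this demonstration places two physically identical gray patches on different surrounds; the patch on the dark surround typically looks lighter than the one on the light surround. Below is a minimal sketch that writes such a figure as SVG markup using only the Python standard library. The function name, the default gray level of 128, and the surround values (30 and 225) are hypothetical choices for illustration, not taken from any particular textbook version of the demonstration.

```python
def simultaneous_contrast_svg(patch_gray: int = 128, size: int = 200) -> str:
    """Return SVG markup for a simple simultaneous lightness contrast figure.

    Two identical gray squares are drawn, one centered on a dark surround
    and one centered on a light surround, side by side.
    """
    patch = f"rgb({patch_gray},{patch_gray},{patch_gray})"
    inner = size // 3                # side length of each central patch
    offset = (size - inner) // 2     # centers the patch within its surround
    parts = [
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{2 * size}" height="{size}">'
    ]
    # Dark surround on the left, light surround on the right (illustrative values).
    for i, surround in enumerate(("rgb(30,30,30)", "rgb(225,225,225)")):
        x = i * size
        parts.append(
            f'<rect x="{x}" y="0" width="{size}" height="{size}" fill="{surround}"/>'
        )
        parts.append(
            f'<rect x="{x + offset}" y="{offset}" width="{inner}" '
            f'height="{inner}" fill="{patch}"/>'
        )
    parts.append("</svg>")
    return "\n".join(parts)


if __name__ == "__main__":
    # Print the markup; save it to a .svg file to view it in a browser.
    print(simultaneous_contrast_svg())
```

Because both central squares share exactly the same fill value, any difference you perceive between them is produced by your visual system rather than by the image data.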

So at this point, with this limited glimpse of the mountain of interdisciplinary evidence available to us, I hope you can better evaluate the claim that visual perception can be objective. And if that claim is false, the arguments built upon it fall with it. I hope that you, the reader, will consider using this paper as a reference when needed to better grasp some features of the visual pathway, as well as some basic ideas about the nature of visual perception.

Before closing, I would like to briefly address two additional issues connected to the topics discussed here. The first is the idea that learning observational representationalism is, in fact, “learning to see.” You will remember that our aforementioned artist and author, Virgil Elliot, continues to promote this idea. Unfortunately (or fortunately, depending on how you choose to consider it), this is not the case. While your perceptions can indeed be molded by experience and assumption, the practice of observational representationalism will not fundamentally change the nature of your visual system. What the practice CAN do is cultivate the relationships between specific visuomotor responses and specific types of visual information that are conducive to representational efforts.

The second is an intuitive argument, put forward in the online thread I started, that I did not address adequately at the time. I felt the answer needed the context of the more robust explanations and insights that I hope I have provided here. The argument was that a photograph (a percept surrogate) should be considered a “less objective” reference source because a surrogate is one step removed from the actual subject (the live percept). And while this may initially sound like a solid argument, it contains a fatal intuitive error: the idea of objectivity in this context describes the nature of the perceiving entity, not the subject of the perception. It is almost like arguing that the closer you are to a live piano being played, the more objectively you will perceive the sound. In actuality, you will respond to any perceptible, appropriate pressure waves in the manner that you and your species have cultivated. No perceived sound originating from a piano, whether you are 10 feet away from a Steinway or listening to a CD of your favorite ivory-key hits, will be more or less “accurately” objective.
I hope that is clear enough now.

Again, as I stated in the 2015 article I mentioned, there are definitely problems to contend with when using photography in the pursuit of representational painting or drawing. However, the points often put forward to argue against its use communicate more of a general misunderstanding of visual perception than anything else. Now, I agree that there are some truly GREAT reasons not to use photography in specific painting and drawing scenarios. But you must be aware of the goal or intention of the artist before you can effectively determine the advantage of any reference source. Yes, some photographic processes have specific limitations, but those limitations do not necessarily outweigh the advantages in every case and every context.

I hope you have found this paper informative. I know some parts of it are very dry, but I wanted to offer a more robust resource than I have been able to provide in a flurry of comment fields on social media. Please let me know if you find any factual errors in what is presented here. I would also like to invite anyone referenced in this paper to submit any corrections, clarifications, and/or refutations for inclusion here.

Best wishes all!

This is an interesting read. To start off, I completely agree that the visual system is unlike the camera. After all, the camera doesn’t interpret the image. But I think this may be where people diverge and end up talking about different things: in one sense, the light-sensitive cells give you something similar to a camera sensor, but that’s about where the analogy breaks down. Once you get to the process of interpreting the sensor data, that’s where the brain function comes in and goes beyond the camera.

I guess what I’m saying is that when discussing these things, we need to be super clear about what we are discussing. This reminds me of a heated discussion I had with a friend about color, where his position was that color exists and is real, and mine was that we create color in our brains through interpretation. I think both explanations have merit, and I’d say that most of the disagreement comes down to the imprecision of language. After all, color does exist in some form, even if only in our imagination. Anyway, back to the topic.

One issue I might have is with the idea that we don’t perceive reality objectively; I find that somewhat misleading. I once saw a TED talk about how we live in a controlled hallucination, and it’s true, given that, as you mention, we construct this data in our heads through neural networks and past experience.

On the other hand, I also follow the Two Minute Papers YouTube channel, where I regularly see AI networks simulate physics better than conventional physics simulations do. That’s because the AI is concerned with the outcome, while the simulations are concerned, in some way, with the correctness of the theory. It’s remarkable, if you think about it, that we have neural networks performing better and faster than simulations built on theory.

This brings us back to the article. Although we live in a subjectively constructed controlled hallucination, I think it’s a stretch to say that we can’t observe objective reality. That would mean that we wouldn’t have any way to develop science as an objective standard for interpretation I would say. That’s the only part I’m not completely sold by the article.

Other than that, I’m so excited that this field is gaining traction. Thanks a bunch for putting this blog together!

Thank you for sharing your thoughts here, Razcore. I agree that language can often be a major stumbling block when navigating these topics. For example, in “that’s where the brain function comes in and goes beyond the camera,” we could wax pedantic and take issue with “goes beyond.” In this context, the phrase implies that the camera and our biological perception system share a similar goal (which I would argue they do not). As such, “goes beyond” doesn’t really make much sense. I cannot tell you how many times language has derailed an otherwise productive conversation, which is why I often try to carefully define terms at the outset of a discussion.

The color issue you were discussing with your friend is a common one. You are both technically correct in that color is a real, biological response to certain environmental stimuli. The “color” does not exist independently of the viewer, although the electromagnetic energy that elicits such a response definitely does. Colloquially, of course, we can talk about mixing colors on a palette and the vast majority of people would understand what we mean. Technically, however, this colloquial heuristic is scientifically inaccurate.

To this issue: “Although we live in a subjectively constructed controlled hallucination, I think it’s a stretch to say that we can’t observe objective reality. That would mean that we wouldn’t have any way to develop science as an objective standard for interpretation I would say. That’s the only part I’m not completely sold by the article.” I would argue that this is a non sequitur. Consider the icon of a research paper on your computer desktop: it is a representation that you can interact with. You are not seeing the file that the icon represents objectively or veridically (in terms of voltages, say), but rather as an icon within an interface. Just because you cannot see the research paper veridically does not mean you cannot have consistent, accurate, and precise interactions with it that can be demonstrated to be useful. It is very difficult for us to get it into our heads that the icon is not the true objective “thing” but a representation. Such are the products of a biological vision system: it produces evolved responses that selection pressures have favored as useful. And useful, evolutionarily speaking, is far more advantageous than objective.

I think you misunderstood my statement about objectivity (speaking of language getting in the way :)). I’m actually right there with you about the icon being just a representation.

I was talking about something else, which perhaps I understood incorrectly: namely, that the icon is a representation of something objective, not simply an evolutionary response. It’s something that we can subject to testing and draw “objective truth” from.

But anyway, I don’t think we need to get into it. I wish we had a better way to express ourselves to minimize these misunderstandings.

Gotcha, Razcore! Language can really be a bugger. I think you have the right mindset to navigate these issues effectively, though, as you do seem sensitive to the misunderstandings that can arise.

Exploring terms like “objective” and “truth” could really be Sisyphean since, language aside, there are many unproductive, esoteric snares, ranging from hard solipsism to appeals to absolute certainty.

With that said, I do hope you will not hesitate to share any additional thoughts or critiques on these (or related) issues, Sisyphean or not. LOL!